2nd May 2022

Limits to Virality

Mindshare’s Culture Vulture is an annual trends report from our Customer Strategy team that identifies the macro trends pervading U.S. culture and how they impact the marketing and communications strategies for clients.

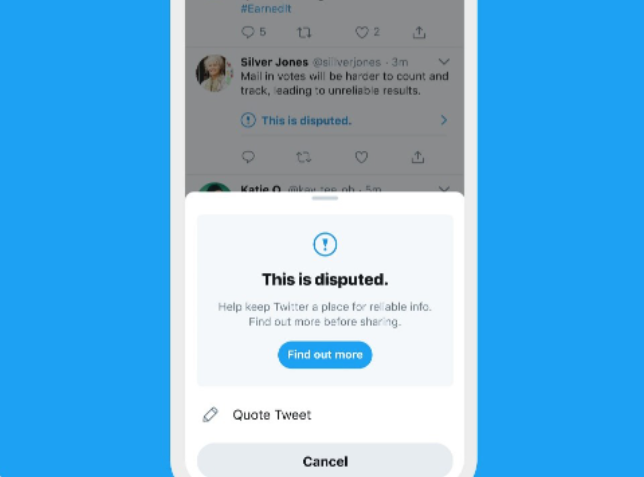

Ahead of the contentious 2020 elections, Twitter enacted a series of changes to its platform to slow down how people shared information. By prompting users to open links before sharing them and encouraging them to add comments to retweets, Twitter aimed to add friction to the sharing of information. In 2020, WhatsApp also introduced a restriction under which frequently forwarded messages can be sent to only one person at a time, down from five previously. And in 2018, YouTube launched fact-checking panels to combat misinformation on sensitive topics, then expanded the service in 2020 to include more third-party sites.

One of the many issues people have with social media platforms is the proliferation of misinformation, which can be particularly dangerous when it comes to politics and medicine. Misinformation is much ‘stickier’ than the truth. Once it is shared online, it can be difficult to remove, and difficult to convince people of what is actually true. Americans have been particularly reluctant to act against it, as the idea of free speech has long been ingrained in our psyche, but things are starting to change.

Vigilantism and retributive harassment are particularly dangerous online. During the search for the culprits of the 2013 Boston Marathon bombing, Reddit and other online communities misidentified several innocent people, including Sunil Tripathi, a missing college student who was falsely named a primary suspect and was later found dead. Even when a person is guilty of a transgression, people often pile on far beyond what is appropriate. Internet justice can quickly escalate from harassment to more nefarious actions like doxing and swatting.

In their early years, social media platforms took a hands-off approach to policing speech, letting things run their natural course. But new scrutiny and public backlash are now forcing many of them to stop harmful content before it can be shared too broadly. Human moderators and AI are part of the solution, but, as with many censorship techniques, they can become heavy-handed and block legitimate content. These platforms have to walk a fine line between banning speech and protecting their users, especially when that speech falls along political lines.

The internet’s ability to amplify voices has been instrumental in giving minority groups the power to bring about just change, but that same power has also been used to silence dissenting opinions and spread misinformation.

Not everyone is happy that these platforms are curbing what they can say and share. Free speech absolutists like Elon Musk believe that these companies are too restrictive and should align more closely with the principles of the First Amendment. The recent crackdowns and the removal of certain public figures have helped alternative platforms like Parler gain a larger following. Many worry that Musk’s takeover of Twitter may reverse many of its policies and turn the platform into a free-for-all, and advertisers fear what looser rules might mean for brand safety.

Brands that once played into viral fads to earn views and seem on-trend are now thinking twice about jumping into these fleeting moments. Too many brands have reacted to viral moments, only to see their response backfire. Brand misalignment, the company’s own missteps, or additional context that emerges after the fact can turn a reactive campaign sour. FOMO once pushed brands into acting on these crazes, but now they have become more reflective.

Key Implications for Brands:

- Now that social media has been around long enough for people to see its effects on society, user engagement on these platforms is changing to reflect a new awareness of the dangers of misinformation and the potential harm to mental health. Many sites are also changing the user experience to combat these dangers, but these changes have hindered engagement and even pushed some users to other platforms.

- Certain memes and trends signal in-group knowledge within niche audiences. A thorough understanding of what they mean to these groups can help endear your brand to them, but a half-baked attempt may end in folly.

- Brands were once quick to jump into trends and memes to capitalize on the conversation, but users have become more aware of the impact a mob can have on individuals thrust into the limelight. Brands need to consider whether joining a viral trend helps promote a worthy cause or simply piles onto an already overwrought issue.